MambaLLIE: Implicit Retinex-Aware Low Light Enhancement with Global-then-Local State Space

NeurIPS 2024

- Jiangwei Weng

- Zhiqiang Yan

- Ying Tai

- Jianjun Qian

- Jian Yang

- Jun Li*

- 1. Nanjing University of Science and Technology; 2. Nanjing University

Effectiveness of MambaLLIE on low light video enhancement.

Abstract

Recent advances in low light image enhancement have been dominated by Retinex-based learning frameworks built on convolutional neural networks (CNNs) and Transformers. However, the vanilla Retinex theory primarily addresses global illumination degradation and neglects local issues such as noise and blur in dark conditions. Moreover, CNNs and Transformers struggle to capture global degradation due to their limited receptive fields. While state space models (SSMs) have shown promise in long-sequence modeling, they face challenges in combining local invariants and global context in visual data. In this paper, we introduce MambaLLIE, an implicit Retinex-aware low light enhancer featuring a global-then-local state space design. We first propose a Local-Enhanced State Space Module (LESSM) that incorporates an augmented local bias within a 2D selective scan mechanism, enhancing the original SSMs by preserving local 2D dependencies. Additionally, we propose an Implicit Retinex-aware Selective Kernel module (IRSK) that dynamically selects features using spatially-varying operations, adapting to varying inputs through an adaptive kernel selection process. Our Global-then-Local State Space Block (GLSSB) integrates LESSM and IRSK with layer normalization (LN) as its core. This design enables MambaLLIE to achieve comprehensive global long-range modeling and flexible local feature aggregation. Extensive experiments demonstrate that MambaLLIE significantly outperforms state-of-the-art CNN and Transformer-based methods.
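The adaptive kernel selection described in the abstract can be illustrated with a selective-kernel-style fusion. The NumPy sketch below is an assumption, not the IRSK implementation: it filters the input with two kernels of different size, derives channel-wise mean and max maps from the fused result, and turns them into per-pixel softmax weights over the branches, so the effective kernel varies across space. `conv2d_same`, `spatial_selective_kernel`, and the random weights `w_sel` are hypothetical.

```python
import numpy as np


def conv2d_same(x, k):
    """Depthwise 'same' cross-correlation of a (C, H, W) map with one odd-sized kernel (zero padding)."""
    kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((0, 0), (ph, ph), (pw, pw)))
    H, W = x.shape[1:]
    out = np.zeros(x.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * xp[:, i:i + H, j:j + W]
    return out


def spatial_selective_kernel(x, kernels, w_sel):
    """Fuse M branches with different receptive fields using per-pixel softmax weights.

    x: (C, H, W) features; kernels: list of M odd-sized kernels;
    w_sel: (M, 2) hypothetical weights mapping the channel-wise mean/max maps to branch scores.
    """
    feats = np.stack([conv2d_same(x, k) for k in kernels])  # (M, C, H, W)
    u = feats.sum(axis=0)                                   # fused features (C, H, W)
    desc = np.stack([u.mean(axis=0), u.max(axis=0)])        # (2, H, W) mean and max over channels
    logits = np.einsum("md,dhw->mhw", w_sel, desc)          # (M, H, W) branch scores per pixel
    logits -= logits.max(axis=0, keepdims=True)             # numerical stability
    attn = np.exp(logits)
    attn /= attn.sum(axis=0, keepdims=True)                 # softmax over branches at every pixel
    out = (attn[:, None] * feats).sum(axis=0)               # spatially varying kernel mixture
    return out, attn


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
kernels = [np.full((3, 3), 1 / 9), np.full((5, 5), 1 / 25)]  # small vs. large receptive field
w_sel = rng.standard_normal((2, 2))
y, attn = spatial_selective_kernel(x, kernels, w_sel)
print(y.shape, attn.shape)  # (4, 8, 8) (2, 8, 8)
```

With identical branches the mixture collapses to a single convolution; with different kernel sizes, each pixel chooses its own blend of small and large receptive fields.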

Method

The overall pipeline of the proposed MambaLLIE. Our Global-then-Local State Space Block (GLSSB) integrates the Local-Enhanced State Space Module (LESSM) and the Implicit Retinex-aware Selective Kernel module (IRSK) with layer normalization as its core, where the maximum and mean maps of the low light image serve as a rough illumination prior for GLSSB. In addition, the local-enhanced design introduces local invariance into the state space model, combining the existing directional scan with our local-enhanced term within the state space.
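To make the two ingredients of this caption concrete, the following NumPy sketch (1) computes channel-wise maximum and mean maps of an RGB image as a rough illumination prior and (2) runs a diagonal selective SSM along four scan directions of a 2D map, then adds a local 3x3 term so that 2D neighbors contribute directly. The discretization (zero-order hold for A, an Euler step for B, softplus step size), the averaging of the four directions, and the additive local term are all assumptions for illustration; the page does not specify how LESSM combines them. All function names and parameters are hypothetical.

```python
import numpy as np


def conv2d_same(x, k):
    """Depthwise 'same' cross-correlation of a (C, H, W) map with one odd-sized kernel (zero padding)."""
    kh, kw = k.shape
    xp = np.pad(x, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    H, W = x.shape[1:]
    out = np.zeros(x.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * xp[:, i:i + H, j:j + W]
    return out


def illumination_prior(img):
    """img: (H, W, 3) RGB in [0, 1] -> (2, H, W) channel-wise max and mean maps."""
    return np.stack([img.max(axis=-1), img.mean(axis=-1)])


def four_direction_scans(x):
    """x: (C, H, W) -> (4, C, H*W): row-major, column-major, and both reversed."""
    c = x.shape[0]
    row = x.reshape(c, -1)
    col = x.transpose(0, 2, 1).reshape(c, -1)
    return np.stack([row, col, row[:, ::-1], col[:, ::-1]])


def selective_scan_1d(u, A, B, C, D, w_delta, b_delta):
    """Diagonal SSM over a 1D sequence u (L,); only the step size delta depends on the input."""
    h = np.zeros_like(A, dtype=float)
    y = np.empty(len(u))
    for t, u_t in enumerate(u):
        delta = np.logaddexp(0.0, w_delta * u_t + b_delta)  # softplus keeps delta > 0
        h = np.exp(delta * A) * h + delta * B * u_t         # ZOH for A, Euler step for B
        y[t] = C @ h + D * u_t
    return y


def local_enhanced_scan(x, params, local_kernel):
    """x: (H, W). Average of four directional scans plus a hypothetical local 2D term."""
    H, W = x.shape
    outs = []
    for k, seq in enumerate(four_direction_scans(x[None])[:, 0]):
        y = selective_scan_1d(seq, *params)
        outs.append(y[::-1] if k >= 2 else y)               # undo the reversed scan order
    row = (outs[0] + outs[2]).reshape(H, W)
    col = (outs[1] + outs[3]).reshape(W, H).T               # column-major back to (H, W)
    global_term = (row + col) / 4.0
    local_term = conv2d_same(x[None], local_kernel)[0]      # direct contribution of 2D neighbors
    return global_term + local_term


rng = np.random.default_rng(0)
img = rng.uniform(0.0, 0.2, size=(6, 6, 3))                 # synthetic dark image
prior = illumination_prior(img)                             # (2, 6, 6)
N = 4
params = (-np.arange(1.0, N + 1), np.ones(N), np.ones(N) / N, 1.0, 1.0, 0.0)
out = local_enhanced_scan(prior[0], params, np.full((3, 3), 1 / 9))
print(out.shape)  # (6, 6)
```

Because the recurrence is causal along each scan, a pixel only sees what precedes it in that ordering; scanning in four directions and adding a local term is one way to let every pixel see both distant context and its immediate 2D neighborhood.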

Effective Receptive Field

The Effective Receptive Field (ERF) visualization for SNR-Net, Retinexformer, MambaIR, and our MambaLLIE. A broader distribution of bright areas indicates a larger ERF. The receptive field of SNR-Net is large but messy due to its SNR-aware mechanism; Retinexformer achieves a larger receptive field around the central point; and MambaIR has a global receptive field but limited local perception. Only MambaLLIE achieves global perception extending outward from the central point while preserving a large local receptive field.
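The page does not state how the ERF maps were produced. A common gradient-based recipe back-propagates from the central output position and visualizes the absolute gradient with respect to each input pixel. The sketch below applies that recipe to a toy stack of linear box-filter convolutions (not SNR-Net, Retinexformer, MambaIR, or MambaLLIE), so the gradient can be computed exactly with NumPy; `erf_of_linear_stack` and `erf_spread` are hypothetical helper names.

```python
import numpy as np


def conv2d_same(x, k):
    """'same' cross-correlation of an (H, W) map with an odd-sized kernel (zero padding)."""
    kh, kw = k.shape
    xp = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    H, W = x.shape
    out = np.zeros((H, W))
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * xp[i:i + H, j:j + W]
    return out


def erf_of_linear_stack(kernels, size):
    """Gradient of the central output pixel w.r.t. every input pixel for a stack of linear convs.

    Back-propagating through a cross-correlation layer is a cross-correlation
    with the flipped kernel, applied from the last layer to the first.
    """
    grad = np.zeros((size, size))
    grad[size // 2, size // 2] = 1.0               # d(out_center) / d(out)
    for k in reversed(kernels):
        grad = conv2d_same(grad, k[::-1, ::-1])
    erf = np.abs(grad)
    return erf / erf.sum()                         # normalize to a distribution


def erf_spread(erf):
    """Root-mean-square distance of the ERF mass from the map center, in pixels."""
    size = erf.shape[0]
    yy, xx = np.mgrid[:size, :size] - size // 2
    return float(np.sqrt((erf * (yy ** 2 + xx ** 2)).sum()))


box = np.full((3, 3), 1 / 9)
shallow = erf_of_linear_stack([box], size=21)
deep = erf_of_linear_stack([box] * 4, size=21)
print(erf_spread(shallow) < erf_spread(deep))  # True
```

For a real network the same quantity is obtained with automatic differentiation, and the resulting maps are usually averaged over many input images before plotting.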

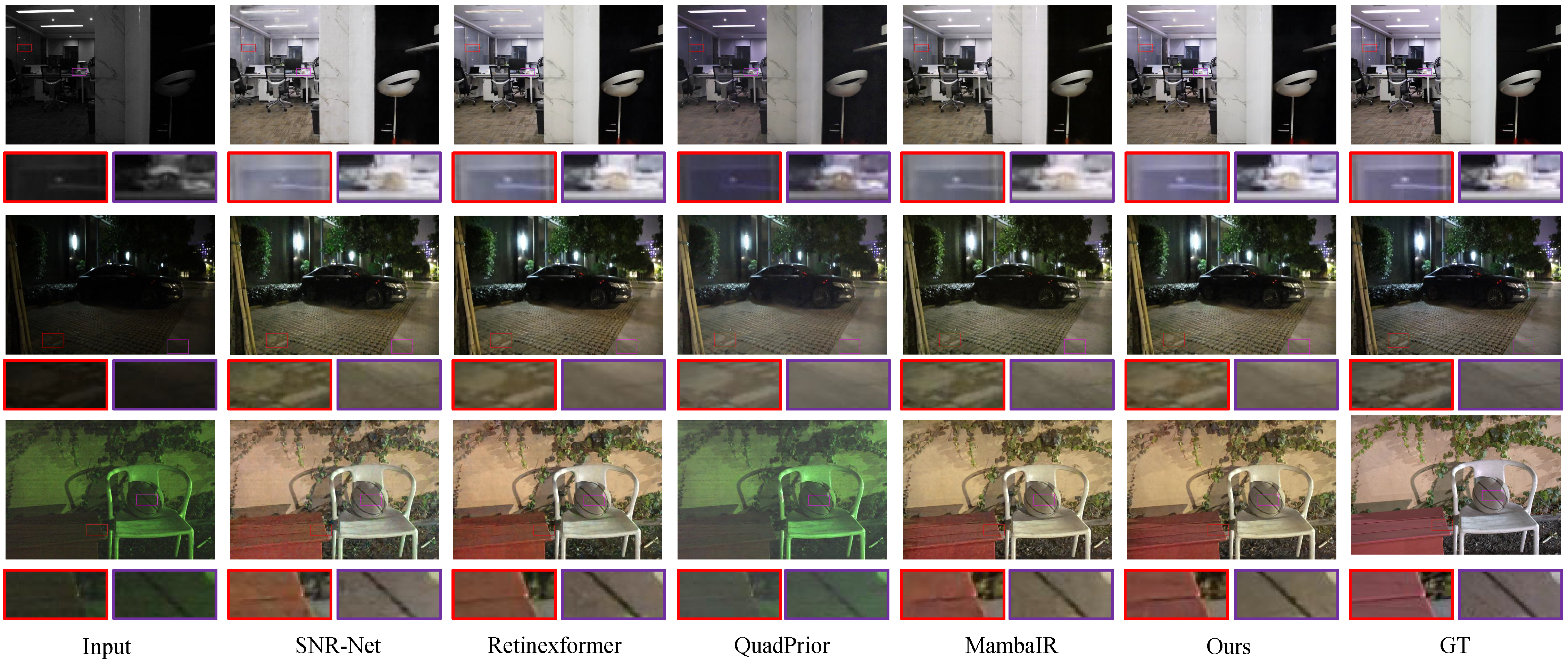

Qualitative comparison with previous methods on the LOL-V2-real and LOL-V2-syn datasets. Our MambaLLIE effectively enhances the illumination and preserves the color.

Qualitative comparison with previous methods on the SMID, SDSD-indoor, and SDSD-outdoor datasets. Our MambaLLIE restores texture and color under challenging degradation, such as the wooden bench and the reflective glass.

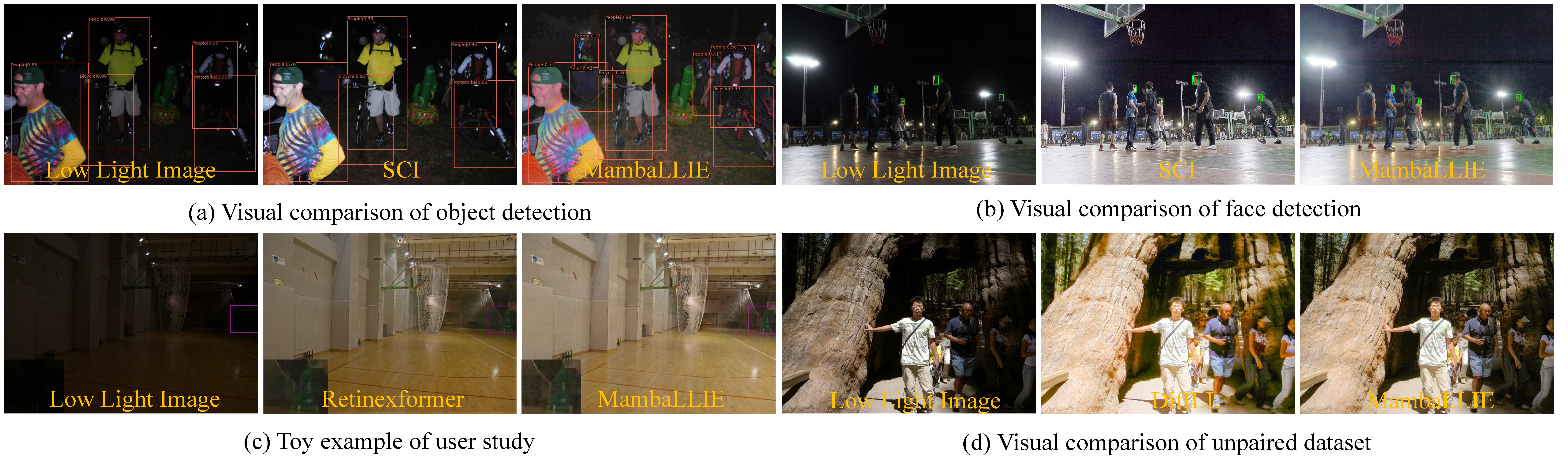

Visual comparison of our MambaLLIE with recent SOTA methods: (a) qualitative comparison on object detection, (b) qualitative comparison on face detection, (c) toy example of the user study, (d) qualitative comparison on unpaired data.

More qualitative comparisons with state-of-the-art methods (zoom in for the best view).

Citation

If you find our work useful in your research, please consider citing:

Acknowledgements

The website template was borrowed from Michaël Gharbi and Junkai Fan.